Virtual reality is no longer a novelty. It reshapes gaming, training, and daily tasks by matching what you see with how you naturally look.

Eye tracking technology reads subtle pupil cues with infrared sensors and smart algorithms. Tobii, whose technology powers systems such as PlayStation VR2 and Vive Pro Eye, uses this approach to bring sharper visuals and faster performance.

That blend of optics, on-device processing, and software intelligence makes scenes feel clearer and interactions more intuitive for users. Foveated rendering and dynamic distortion compensation keep images crisp where attention falls.

The result is a human-centered experience that feels effortless from the moment you put it on. This guide previews how the systems work, why they boost presence and performance, and which headsets lead the market today.

Key Takeaways

- Immediate impact: Modern systems improve clarity and responsiveness.

- Intuitive interaction: Eyes act as natural inputs for smoother control.

- Proven tech: Leaders like Tobii bring decades of innovation to headsets.

- Practical benefits: Better visuals, comfort, and faster performance.

- Now available: These capabilities drive real-world use in entertainment and enterprise.

Why Eye Tracking Is Transforming VR Experiences Today

By translating where you look into actionable signals, modern systems let virtual spaces adapt instantly to your focus. Gaze direction and subtle pupil cues become interface inputs, so scenes respond to your attention and feel more alive. This responsiveness boosts immersion and makes experiences easier to navigate.

Directing compute to the center of your vision improves performance without a visible loss of quality. Gaze-aware rendering concentrates GPU power where you look, raising frame rates while keeping the region you are actually looking at sharp.

Comfort follows performance. Stable frame rates and smoother rendering reduce strain and let you stay engaged longer. Systems that sense focus also let interfaces prioritize what matters, lowering cognitive load and speeding up selection.

- Faster interactions: gaze-led selection and UI that anticipates intent.

- Richer gaming: believable NPC eye contact and flow-preserving controls.

- Actionable insights: better analytics about user engagement and attention.

These gains are shipping today on commercial devices, so truly responsive virtual reality is practical now. Read why the tech is a game-changer for headsets and how developers are already using it to raise immersion and usability.

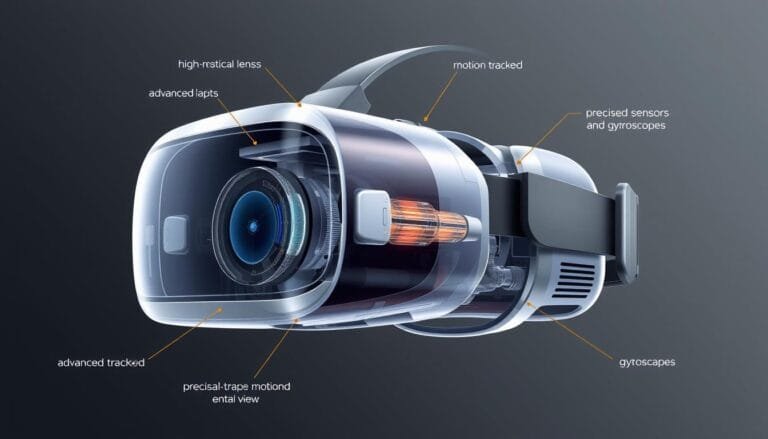

How Eye Tracking Works Inside a Headset

A precise optical stack converts brief reflections and dark pupils into stable gaze signals. Invisible infrared illumination lights the cornea while ring-mounted cameras capture high-speed images. Computer vision and machine learning then translate raw frames into low-latency, usable information for apps.

Infrared lights, ring-mounted cameras, and machine learning

The system pairs invisible IR LEDs with precision cameras that record corneal reflections and pupil centers. Algorithms measure tiny changes in distance and angle between those points.

That geometry, the offset between the pupil center and the corneal reflection, lets software infer where the user is looking to within a small angular error, which is usually reported in degrees of visual angle. Signal filters and models keep output stable during head motion and varied lighting.
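
As a rough illustration of that geometry, the sketch below fits a per-axis linear map from the pupil-minus-reflection offset (in camera pixels) to a gaze angle (in degrees), using a few calibration targets. Production trackers rely on richer 3D eye models; the function names, pixel values, and the linear form are assumptions made for this example, not any vendor's method.

```python
from statistics import mean

def pupil_glint_offset(pupil, glint):
    """Vector from the corneal reflection (glint) to the pupil center, in pixels."""
    return (pupil[0] - glint[0], pupil[1] - glint[1])

def fit_axis(offsets, angles):
    """Least-squares line: angle = slope * offset + intercept, for one axis."""
    mx, my = mean(offsets), mean(angles)
    var = sum((x - mx) ** 2 for x in offsets)
    if var == 0:
        raise ValueError("calibration offsets must not all be equal")
    slope = sum((x - mx) * (y - my) for x, y in zip(offsets, angles)) / var
    return slope, my - slope * mx

def calibrate(points):
    """points: list of (pupil_px, glint_px, target_angle_deg) tuples."""
    offsets = [pupil_glint_offset(p, g) for p, g, _ in points]
    fit_x = fit_axis([o[0] for o in offsets], [a[0] for _, _, a in points])
    fit_y = fit_axis([o[1] for o in offsets], [a[1] for _, _, a in points])
    return fit_x, fit_y

def estimate_gaze(model, pupil, glint):
    """Apply the fitted per-axis lines to a new pupil/glint observation."""
    (sx, bx), (sy, by) = model
    dx, dy = pupil_glint_offset(pupil, glint)
    return sx * dx + bx, sy * dy + by

# Hypothetical calibration data: the user looks at targets at known angles.
# Image y grows downward, so looking up moves the pupil to smaller y values.
CALIBRATION = [
    ((310, 240), (320, 240), (-10.0, 0.0)),
    ((320, 240), (320, 240), (0.0, 0.0)),
    ((330, 240), (320, 240), (10.0, 0.0)),
    ((320, 232), (320, 240), (0.0, 8.0)),
    ((320, 248), (320, 240), (0.0, -8.0)),
]

model = calibrate(CALIBRATION)
gaze_deg = estimate_gaze(model, pupil=(325, 236), glint=(320, 240))
```

Using the offset rather than the raw pupil position is what gives the method some tolerance to small headset shifts, since the pupil and the reflection move together in the camera image.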

Vergence vs. gaze modeling in virtual scenes

In the physical world, eyes converge on a real focal point. In a virtual scene, displays sit very close to the face, so convergence cues can conflict with perceived depth.

The solution is to cast the line of sight into the scene and use the depth of the object it hits. Combining optical gaze data with scene distance yields reliable gaze targets even when the eyes' convergence point and the perceived depth differ.
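
One way to picture this is a ray cast from the eye into the scene, returning the nearest object the ray hits. The sketch below assumes each object is approximated by a bounding sphere and that the tracker supplies a single combined gaze ray; the object names and sizes are made up for illustration.

```python
import math

def normalize(v):
    """Scale a 3D vector to unit length."""
    n = math.sqrt(sum(c * c for c in v))
    return tuple(c / n for c in v)

def ray_sphere_distance(origin, direction, center, radius):
    """Distance along a unit-length ray to the first sphere hit, or None.

    A ray that starts inside the sphere is treated as a miss in this sketch.
    """
    oc = tuple(o - c for o, c in zip(origin, center))
    b = sum(d * x for d, x in zip(direction, oc))
    c = sum(x * x for x in oc) - radius * radius
    disc = b * b - c
    if disc < 0:
        return None
    t = -b - math.sqrt(disc)
    return t if t >= 0 else None

def gaze_target(origin, direction, objects):
    """Return (name, distance) of the nearest object hit by the gaze ray."""
    direction = normalize(direction)
    hits = []
    for name, center, radius in objects:
        t = ray_sphere_distance(origin, direction, center, radius)
        if t is not None:
            hits.append((t, name))
    if not hits:
        return None
    t, name = min(hits)
    return name, t

# Hypothetical scene: (name, center in meters, bounding radius in meters).
SCENE = [
    ("button", (0.0, 0.0, -2.0), 0.5),
    ("wall_panel", (0.0, 0.0, -5.0), 1.0),
]

hit = gaze_target((0.0, 0.0, 0.0), (0.0, 0.0, -1.0), SCENE)
miss = gaze_target((0.0, 0.0, 0.0), (1.0, 0.0, 0.0), SCENE)
```

The returned distance is the scene depth at the gaze point, which an application can use in place of a depth estimate derived from eye convergence alone.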

Core outputs and what they reveal

- Pupil size: signals arousal and load.

- Gaze direction: pinpoints attention and selection intent.

- Eye openness: indicates blink rate and engagement.

Developers get stable streams of these metrics and must apply processes that respect privacy and context. Robust tracking technology, smart sensor fusion, and real-time signal processing make the data useful across lighting, motion, and distance changes.
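
To make these outputs concrete, here is a minimal sketch of a per-frame sample record and a blink-rate calculation derived from the eye openness signal. The field names, units, and the 0.2 "closed" threshold are assumptions for illustration; real SDKs define their own structures.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class GazeSample:
    t: float            # timestamp in seconds
    direction: tuple    # unit gaze vector (x, y, z)
    pupil_mm: float     # pupil diameter in millimeters
    openness: float     # 0.0 = closed, 1.0 = fully open

def count_blinks(samples, closed_below=0.2):
    """Count open-to-closed transitions in the openness signal."""
    blinks = 0
    was_closed = False
    for s in samples:
        is_closed = s.openness < closed_below
        if is_closed and not was_closed:
            blinks += 1
        was_closed = is_closed
    return blinks

def blinks_per_minute(samples, closed_below=0.2):
    """Blink rate over the time span covered by the samples."""
    if len(samples) < 2:
        return 0.0
    duration = samples[-1].t - samples[0].t
    if duration <= 0:
        return 0.0
    return count_blinks(samples, closed_below) * 60.0 / duration

# Synthetic stream at 2 Hz with two brief eye closures.
openness_values = [1.0, 1.0, 0.1, 0.1, 1.0, 1.0, 0.05, 1.0]
stream = [
    GazeSample(t=i * 0.5, direction=(0.0, 0.0, -1.0), pupil_mm=3.5, openness=o)
    for i, o in enumerate(openness_values)
]
blink_count = count_blinks(stream)
blink_rate = blinks_per_minute(stream)
```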

Performance Unleashed: Foveated Rendering and Visual Fidelity

Smart rendering shifts detail to the exact spot you focus on, letting visuals stay crisp while saving compute. That change unlocks more graphical power without heavier hardware or higher thermals.

Dynamic foveated rendering to focus GPU/CPU where you look

Dynamic foveated rendering reallocates GPU and CPU work to the foveal region. The engine renders the center in high detail while lowering resolution in the periphery.
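
The idea can be sketched by assigning each screen tile a resolution scale based on its angular distance from the gaze point, then adding up the pixels that still need shading. The region sizes (10 and 25 degrees), the scales, and the tile grid below are hypothetical values chosen for illustration, and shaded-pixel count is only a proxy for actual frame time.

```python
import math

def eccentricity_deg(gaze_deg, tile_deg):
    """Angular distance from the gaze point to a tile center (flat approximation)."""
    return math.hypot(tile_deg[0] - gaze_deg[0], tile_deg[1] - gaze_deg[1])

def shading_scale(ecc_deg, inner_deg=10.0, outer_deg=25.0):
    """Per-axis resolution scale for a tile at the given eccentricity."""
    if ecc_deg <= inner_deg:
        return 1.0    # foveal region: full resolution
    if ecc_deg <= outer_deg:
        return 0.5    # mid region: half resolution per axis
    return 0.25       # periphery: quarter resolution per axis

def relative_pixel_cost(gaze_deg, fov_deg=100, tile_deg=5):
    """Shaded pixels relative to rendering every tile at full resolution."""
    half = fov_deg / 2
    n = int(fov_deg // tile_deg)
    total = 0.0
    for i in range(n):
        for j in range(n):
            center = (-half + (i + 0.5) * tile_deg,
                      -half + (j + 0.5) * tile_deg)
            scale = shading_scale(eccentricity_deg(gaze_deg, center))
            total += scale * scale   # the scale applies on both axes
    return total / (n * n)

cost_center = relative_pixel_cost((0.0, 0.0))
cost_corner = relative_pixel_cost((40.0, 40.0))
```

Because the full-resolution region follows the gaze point, it can stay small, which is where most of the savings over fixed foveation come from.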

Unity reports real-world data showing up to 3.6x performance gains when gaze drives rendering budgets on a shipping system like PS VR2. That extra headroom lets developers add effects or raise resolution.

Dynamic distortion compensation for sharpness across the field of view

Dynamic distortion compensation adjusts lens correction in real time based on eye position. This tuning removes edge artifacts and keeps scenes sharp from center to rim.

The result is consistent graphical clarity and fidelity across the entire field of view, improving comfort for longer sessions.

High, stable frame-rates and smoother rendering for comfort and presence

These rendering advances work together to deliver steady frame rates and smoother motion. Higher fidelity and fewer artifacts sustain presence in virtual reality and reduce visual fatigue.

Developers should treat eye tracking technology as a performance multiplier. It benefits visuals, battery life, and thermal budgets while improving user comfort and immersion.

Immersion and Interaction: From Gaze to Intuitive Control

Micro-movements and glance timing let avatars behave like real people in shared scenes. These nonverbal cues—subtle shifts, eye contact, and blink patterns—build social awareness and a stronger sense of presence.

Avatars that mirror tiny movements create authentic human connection. When virtual faces reflect the same small signals as a real conversation, users feel seen and understood.

Gaze-as-input makes selection feel natural. Look to highlight a menu item, dwell to select, and get instant, low-friction response from the interface.
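
A dwell timer is one simple way to implement this pattern. The sketch below assumes the application already knows which UI element the gaze ray is on each frame; the class name and the 0.6 second default are illustrative choices, not a recommended standard.

```python
class DwellSelector:
    """Fires a selection once gaze has rested on one target long enough."""

    def __init__(self, dwell_seconds=0.6):
        self.dwell_seconds = dwell_seconds
        self.target = None
        self.since = None
        self.fired = False

    def update(self, target, now):
        """Feed the currently gazed target (or None) and the current time.

        Returns the target to select on the frame the dwell completes,
        otherwise None.
        """
        if target != self.target:
            # Gaze moved to something else: restart the timer.
            self.target, self.since, self.fired = target, now, False
            return None
        if target is None or self.fired:
            return None
        if now - self.since >= self.dwell_seconds:
            self.fired = True   # select only once per continuous look
            return target
        return None

selector = DwellSelector(dwell_seconds=0.5)
events = [
    selector.update("play", 0.0),   # gaze arrives, timer starts
    selector.update("play", 0.3),   # still dwelling
    selector.update("play", 0.5),   # dwell complete, selection fires
    selector.update("play", 0.9),   # no repeat while gaze stays put
    selector.update(None, 1.0),     # gaze leaves
    selector.update("play", 1.1),   # gaze returns, timer restarts
    selector.update("play", 1.7),   # fires again
]
```

Requiring the gaze to leave and return before a second selection is one way to limit accidental repeat activations.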

Attentive UI surfaces controls only when you focus on them, then clears away to preserve flow. That approach reduces cognitive load and helps users stay in the moment.

- Believable social presence: micro-movements around the eyes make avatars expressive.

- Seamless selection: gaze-driven UI speeds tasks with minimal training.

- Assistive onboarding: new users learn the way they already look and act.

- Meaningful feedback: subtle visual or haptic cues confirm intent without clutter.

Designers should map gaze, dwell, and small ocular gestures into forgiving controls that respect human limits. When gaze augments hands or controllers, complex tasks become faster and less tiring.

For a deeper look at interaction models and practical implementations, see this hands-on perspective on gaze-driven interfaces: gaze interaction in VR.

Research, Insights, and Analytics in Virtual Reality

Researchers use gaze data to map what captures attention and how people react in simulated worlds.

Gaze-based analytics as a window into cognitive processes

Gaze analytics give objective measures of what drew attention and for how long. These signals reveal intent, hesitation, and moment-to-moment focus.
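
A common first metric is total viewing time per area of interest (AOI). The sketch below assumes each gaze sample has already been labeled with the AOI it landed on; the sample format and the 0.1 second gap limit are assumptions for this example.

```python
from collections import defaultdict

def dwell_time_by_aoi(samples, max_gap=0.1):
    """Sum viewing time per AOI from (timestamp_seconds, aoi_or_None) samples.

    Each sample's AOI is credited with the time until the next sample.
    Gaps longer than max_gap (for example, tracking loss) are not counted.
    """
    totals = defaultdict(float)
    for (t0, aoi), (t1, _) in zip(samples, samples[1:]):
        dt = t1 - t0
        if aoi is not None and 0 < dt <= max_gap:
            totals[aoi] += dt
    return dict(totals)

# Synthetic session: 20 Hz samples with one long tracking gap at the end.
session = [
    (0.00, "menu"), (0.05, "menu"),
    (0.10, "enemy"), (0.15, "enemy"),
    (0.20, None),
    (0.25, "menu"), (1.00, "menu"),
]
dwell = dwell_time_by_aoi(session)
```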

That information powers hypothesis testing and helps teams refine content and interfaces with real behavioral evidence.

Adaptive training, simulation, MedTech, and EdTech applications

Data from controlled simulations supports adaptive training and personalized healthcare tools.

Developers use analytics to tune difficulty, tailor feedback, and improve therapeutic workflows in MedTech and healthcare applications.

What studies reveal about presence, learning, and behavioral safety

Evidence is nuanced: immersive presence can rise while learning gains do not always follow. One study found that an immersive lab simulation produced more presence but less learning than its desktop alternative.

Driving simulations illustrate another benefit: comfort markers sometimes predict risky behavior, which can inform safety design before real-world trials.

- Objective lens on cognition: precise measures of attention and response.

- Product refinement: insights guide UI changes and content improvements.

- Ethical data use: collect and interpret information with consent to protect trust.

Eye Tracking VR Headset Options and What to Look For

Picking a model is about matching ergonomics, software, and rendering features to real user needs. Look for devices that blend comfort and optical quality with robust developer tooling.

Standout devices and where they shine

- PlayStation VR2: great for gaming with polished SDKs and console integration.

- Vive Pro Eye: enterprise-ready optics and analytics support for research.

- Pimax Crystal: high-resolution visuals for simulation and prosumer use.

- Pico Neo 3 Pro Eye: untethered form factor for mobile training scenarios.

- HP Reverb G2 Omnicept: rich sensor suite for medical and enterprise research.

Buyer’s lens: features to prioritize

Comfort and optics come first: dynamic distortion compensation keeps clarity across the field of view and reduces long-session strain.

Calibration speed, robustness, and low latency matter for consistent performance. Test how quickly a model adapts to different users.
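
When comparing devices, one practical check is to have several users look at known targets after calibration and measure the angular error. The sketch below assumes the headset SDK exposes an estimated gaze direction as a 3D vector; the helper names are hypothetical.

```python
import math

def angular_error_deg(estimated, target):
    """Angle in degrees between two 3D direction vectors."""
    dot = sum(a * b for a, b in zip(estimated, target))
    norm = (math.sqrt(sum(a * a for a in estimated))
            * math.sqrt(sum(b * b for b in target)))
    # Clamp to guard against rounding slightly outside [-1, 1].
    cos_angle = max(-1.0, min(1.0, dot / norm))
    return math.degrees(math.acos(cos_angle))

def calibration_report(pairs):
    """Summarize errors over (estimated_direction, target_direction) pairs."""
    errors = [angular_error_deg(e, t) for e, t in pairs]
    return {"mean_deg": sum(errors) / len(errors), "worst_deg": max(errors)}

# Synthetic check: one perfect estimate and one that is 90 degrees off.
report = calibration_report([
    ((0.0, 0.0, -1.0), (0.0, 0.0, -1.0)),
    ((1.0, 0.0, 0.0), (0.0, 1.0, 0.0)),
])
```

Running the same check across users with different face shapes, glasses, and fit gives a more honest picture than a single tester.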

Evaluate rendering support for gaze-driven foveation, SDK maturity, analytics tooling, and privacy or on-device processing options to fit your organization.

“Choose a device that matches your users and use cases—balance fit, software, and sustained comfort.”

Implementation Playbook for Developers and Designers

Begin with a design brief that ties gaze features to measurable training or application outcomes. Define core tasks, success metrics, and acceptable comfort thresholds before coding.

Designing gaze-first interfaces and reducing visual strain

Map common flows and decide when glance selects and when a manual confirm is required. Use short dwell windows plus a subtle visual or haptic confirmation to avoid accidental activation.

Pair glance input with hands or controllers for compound actions: let eye movements speed selection and let hands perform precise manipulation.
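
The division of labor can be expressed as a small per-frame decision: gaze proposes a target, and the controller commits and manipulates. This is a sketch under assumed inputs (a gazed target name, a trigger state, and the currently held object); the verb names are invented for the example.

```python
def resolve_action(gazed_target, trigger_pressed, grabbed=None):
    """Decide what one input frame should do.

    Returns a (verb, target) tuple. Gaze alone only highlights;
    the controller trigger commits and drives manipulation.
    """
    if grabbed is not None:
        # While something is held, the hand owns the interaction,
        # so stray glances cannot change the target.
        if trigger_pressed:
            return ("manipulate", grabbed)
        return ("release", grabbed)
    if gazed_target is None:
        return ("idle", None)
    if trigger_pressed:
        return ("grab", gazed_target)
    return ("highlight", gazed_target)

frames = [
    resolve_action("lever", trigger_pressed=False),
    resolve_action("lever", trigger_pressed=True),
    resolve_action("door", trigger_pressed=True, grabbed="lever"),
    resolve_action("door", trigger_pressed=False, grabbed="lever"),
    resolve_action(None, trigger_pressed=False),
]
```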

For comfort, keep frame pacing stable, apply foveated rendering thoughtfully, and avoid high-contrast motion in the periphery to reduce fatigue.

Ethical data practices, privacy, and meaningful feedback loops

Treat gaze-derived signals as sensitive biometrics. Minimize collection, anonymize and store data securely, and default to on-device processing when possible.
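
As one possible shape for data minimization, the sketch below reduces a session to coarse per-target totals on the device and replaces the user ID with a keyed hash before anything is stored or uploaded. The function names and summary format are hypothetical, and keyed hashing is pseudonymization rather than full anonymization, so the output still needs careful handling.

```python
import hashlib
import hmac
import secrets

def pseudonymous_id(user_id, device_key):
    """Keyed SHA-256 of the user ID; the key never leaves the device."""
    return hmac.new(device_key, user_id.encode("utf-8"), hashlib.sha256).hexdigest()

def summarize_session(user_id, device_key, aoi_seconds):
    """Build the only record that is kept: rounded totals, no raw gaze samples."""
    return {
        "user": pseudonymous_id(user_id, device_key),
        "aoi_seconds": {aoi: round(sec, 1) for aoi, sec in aoi_seconds.items()},
    }

# In this sketch the key is generated once and kept in device-local storage.
device_key = secrets.token_bytes(32)
summary = summarize_session("alice@example.com", device_key,
                            {"menu": 12.34, "tutorial": 48.06})
```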

Communicate clearly how data improves training and applications. Offer opt-in analytics and easy controls to pause or delete recorded sessions.

- Resilient rendering: implement graceful degradation when sensors fail, and fall back to fixed, high-quality center rendering.

- Calibration UX: short, guided steps with immediate feedback; allow re-calibration without leaving the session.

- Testing loop: run iterative studies with diverse participants and analyze eye movement metrics to refine UI and lower strain.
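
The resilient rendering item above could be sketched as a per-frame mode choice driven by tracking confidence. The confidence threshold, hold time, and region sizes below are assumed values, and the returned dictionary is a stand-in for whatever settings a real renderer accepts.

```python
LENS_CENTER = (0.5, 0.5)   # normalized screen coordinates

def choose_foveation(gaze, confidence, seconds_since_valid,
                     min_confidence=0.6, hold_seconds=0.15):
    """Pick the foveation settings for the current frame.

    gaze: (x, y) in normalized screen coordinates, or None if unavailable.
    seconds_since_valid: time since the last trusted gaze sample.
    """
    if gaze is not None and confidence >= min_confidence:
        return {"mode": "dynamic", "center": gaze, "inner_radius": 0.15}
    if seconds_since_valid <= hold_seconds:
        # Short dropouts such as blinks: keep the previous frame's settings.
        return {"mode": "hold"}
    # Sustained loss: fixed foveation with a larger full-quality center.
    return {"mode": "fixed", "center": LENS_CENTER, "inner_radius": 0.30}

tracked = choose_foveation((0.62, 0.41), confidence=0.9, seconds_since_valid=0.0)
blink = choose_foveation(None, confidence=0.0, seconds_since_valid=0.08)
lost = choose_foveation(None, confidence=0.0, seconds_since_valid=1.5)
```

Widening the full-quality region in the fixed mode trades some performance for safety, since the renderer no longer knows where the user is looking.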

“Design for intent and comfort first—then optimize for speed and richness.”

Final approach: build features that help users achieve tasks faster while protecting privacy. Iterate with real users and measure both performance and comfort across builds.

Conclusion

By combining precise sensors with smart rendering, interactive worlds become both faster and emotionally richer.

Eye tracking and refined gaze models align rendering, input, and feedback to match how people naturally look and react. This raises performance through foveated rendering and deepens social presence via believable movements.

Choose devices with proven implementations—Tobii-enabled models like PS VR2 and Vive Pro Eye show what mature systems deliver. Design with empathy and protect sensitive data as you collect analytics.

Research keeps refining best practices so presence also drives real outcomes in learning and healthcare. Build responsibly and iteratively: when gaze guides the experience, the virtual world responds to you.